Run TypeScript & Deno Natively in AWS Lambda

I’m not going to talk about why you would want to run Deno instead of Node, or TypeScript instead of JavaScript. Instead, I’m just going to show you how I did it natively in AWS Lambda without Docker images, third-party libraries or changing my code!

But first, I need to talk about how I didn’t do it so I can introduce you to some important concepts.

🙅♂️ Custom Runtime

At re:Invent 2018, AWS announced Custom Runtimes as a way to bring your own programming language to Lambda. There are plenty of these now. AWS even have a guide on how to make one for PHP.

Custom Runtimes seem simple on the surface. You need three things:

- The program that runs your code. In our case, that’s deno.
- An executable bootstrap file included in your function code.
- A processing loop implementation (I’ll explain what this is below).

The first requirement can be satisfied by including the program in your function code, but that’s not ideal and may not be possible due to its size. Function code packages (zip files) can be up to 50MB. You could solve this by using a Docker image, but let’s not. Another option is to put it in a Lambda layer, where the limit is 250MB unzipped (shared between your function code and all of its layers) and you get nice reusability, even between accounts. I like this option.

Let’s assume you put deno in a zip file and created a layer from it. Layers get unzipped to /opt so now you have /opt/deno ready to run.

In your function configuration, you set Runtime to provided (these days a versioned variant such as provided.al2023) instead of something like nodejs22.x or python3.13. This tells Lambda that you’re providing the runtime, and to expect an executable file in your function package named bootstrap.

bootstrap is responsible for starting your processing loop. But what is a processing loop?

AWS Lambda Processing Loop

Let’s take a quick detour and talk about this. By now, most people know that Lambda functions run in containers and sleep between invocations. But how does the JSON payload you sent during an Invoke actually get to your handler code? That’s the processing loop!

The processing loop is an infinite loop. In its simplest form, each iteration starts by calling the Lambda Runtime API’s /runtime/invocation/next endpoint to get details of the invocation it’s supposed to process. Those details include the payload and other things like AwsRequestId, the invocation deadline, X-Ray trace ID, etc.

The processing loop packages all of that into an event and a context and calls the handler function. It knows where the handler function is from the _HANDLER environment variable, which Lambda sets based on your configuration.

If the handler returns successfully, the loop must report the result to /runtime/invocation/AwsRequestId/response. If there was an error, that needs to be reported to /runtime/invocation/AwsRequestId/error. Either way, as soon as one of those calls is made, the container is frozen.

When there’s another invocation to process, the container is unfrozen. It’s still at the same place in its processing loop (the end of it). It loops around and calls the API to get the next invocation. The cycle repeats.

Before entering the loop, your custom runtime will likely check that the handler in _HANDLER actually exists, validate some config, or do other setup. If any of that goes wrong, it can be reported to Lambda via /runtime/init/error.
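To make the loop less abstract, here is a minimal sketch of one iteration in TypeScript. It is not the AWS implementation. The HTTP client is injected so the logic can be exercised without a real Runtime API, and the names HttpFn, processOne and processingLoop are made up for this example. I’m assuming the base URL carries the API version prefix (http://$AWS_LAMBDA_RUNTIME_API/2018-06-01), which the shortened paths above leave out.

```typescript
// Minimal shape of the HTTP calls the loop needs, so a fake can be injected.
interface RuntimeResponse {
  headers: { get(name: string): string | null };
  json(): Promise<unknown>;
}
type HttpFn = (
  url: string,
  init?: { method?: string; body?: string },
) => Promise<RuntimeResponse>;

type Handler = (
  event: unknown,
  context: { awsRequestId: string },
) => Promise<unknown> | unknown;

// One iteration: get the next invocation, call the handler, report the outcome.
async function processOne(http: HttpFn, baseUrl: string, handler: Handler): Promise<void> {
  // This call blocks until Lambda has an invocation for us.
  const next = await http(`${baseUrl}/runtime/invocation/next`);
  const requestId = next.headers.get("Lambda-Runtime-Aws-Request-Id") ?? "";
  const event = await next.json();

  try {
    const result = await handler(event, { awsRequestId: requestId });
    await http(`${baseUrl}/runtime/invocation/${requestId}/response`, {
      method: "POST",
      body: JSON.stringify(result ?? null),
    });
  } catch (err) {
    const errorMessage = err instanceof Error ? err.message : String(err);
    await http(`${baseUrl}/runtime/invocation/${requestId}/error`, {
      method: "POST",
      body: JSON.stringify({ errorMessage, errorType: "HandlerError" }),
    });
  }
}

// The loop itself. In a real runtime this never returns.
async function processingLoop(http: HttpFn, baseUrl: string, handler: Handler): Promise<void> {
  while (true) {
    await processOne(http, baseUrl, handler);
  }
}

// In a real bootstrap you would pass the global fetch, for example:
//   processingLoop(fetch, `http://${runtimeApiHost}/2018-06-01`, handler)
// where runtimeApiHost comes from the AWS_LAMBDA_RUNTIME_API environment variable.
```

Even this toy version ignores deadlines, tracing, streaming and init errors, which hints at how much work a robust loop involves.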

Back to bootstrap

The first thing a new container does (as far as we mortals can see) is execute a “bootstrap” file.

When Runtime is set to provided, the bootstrap file in your code package is executed. When Runtime is set to anything else, the AWS file at /var/runtime/bootstrap is executed. The Node runtime’s bootstrap is a shell script that boils down to starting the node process, pointing it at a JavaScript file that contains the processing loop AWS engineers implemented.

In the case of the PHP example I mentioned above, they added the #!/opt/bin/php shebang to the top of their bootstrap file. This says which program to use to interpret the rest of the file. In this case, /opt/bin/php exists because it’s part of a Layer. The rest of the bootstrap file contains a very crude processing loop.

In reality, a robust processing loop is actually quite complex due to things like error handling, X-Ray tracing, telemetry, logging, response streaming, etc. In my opinion, writing a processing loop should be avoided if possible.

🙅♂️ Deno’s documented way

I found a page on the Deno documentation titled How to Deploy Deno to AWS Lambda. This uses AWS Lambda Web Adapter from AWS Labs. I’ll briefly describe what that library does.

AWS Lambda Web Adapter comes as a Docker image that you use as a base to build your own image. It contains a processing loop written in Rust which handles all the Runtime API interaction for you. All you have to do is run a web server inside the Docker container and the Web Adapter will call your web server once for each request.

Maybe this is appealing to you but not to me. It adds significant overhead and complexity, and build times are going to be much, much slower than a simple zip file. I haven’t actually tried it but I suspect the cold start times are not great.

🙆♂️ Reuse the AWS engineers’ hard work

I wanted a solution that didn’t involve me changing my code or writing any new code. I don’t want to use a Custom Runtime. I want to benefit from the years of work the AWS engineers have put into perfecting their processing loop. I also want to automatically get any new features they add to it.

Therefore, I left my function’s Runtime set to nodejs22.x and instead I made use of another Lambda feature called wrapper scripts.

Wrapper scripts are executable files that you include somewhere in the Lambda environment. Again, this could be in your code package or a Layer. You then tell Lambda where it is using the AWS_LAMBDA_EXEC_WRAPPER environment variable.

In the case of the Node runtimes, the AWS-supplied bootstrap script does its normal work and right before it starts node, it checks if you’ve set that environment variable and if the file exists. If so, instead of executing node, it executes your wrapper script file, passing it all of the arguments it would have given to node. Your script is supposed to do something then continue with executing node. For example, it might add some more arguments.

I created a Lambda layer that contains the deno program. Downloading Deno is fairly simple. Release archives follow this URL format:

https://dl.deno.land/release/${deno_version}/deno-${target}.zip

${deno_version} can be the latest version number from https://dl.deno.land/release-latest.txt such as v2.1.7.

For Lambda, ${target} is either aarch64-unknown-linux-gnu or x86_64-unknown-linux-gnu depending on whether you’re using the arm64 or x86_64 architecture, respectively.

In my case, the URL was https://dl.deno.land/release/v2.1.7/deno-x86_64-unknown-linux-gnu.zip. These files are just stored in S3.
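As a small sketch, the URL can be assembled from the version and the Lambda architecture like this. The function and type names are mine; the URL pattern and target names are the ones given above.

```typescript
type LambdaArch = "arm64" | "x86_64";

// Maps a Lambda architecture to the matching Deno release target.
function denoTarget(arch: LambdaArch): string {
  return arch === "arm64" ? "aarch64-unknown-linux-gnu" : "x86_64-unknown-linux-gnu";
}

// Builds the download URL for a given release, e.g. "v2.1.7".
function denoDownloadUrl(version: string, arch: LambdaArch): string {
  return `https://dl.deno.land/release/${version}/deno-${denoTarget(arch)}.zip`;
}

console.log(denoDownloadUrl("v2.1.7", "x86_64"));
// prints https://dl.deno.land/release/v2.1.7/deno-x86_64-unknown-linux-gnu.zip
```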

In the zip file, I put the deno program into a folder called bin so that it would end up at /opt/bin/deno. The folder doesn’t actually matter, but it seems like a nice convention.

🦕 Making Deno happy

With this Lambda layer, we now have /opt/bin/deno in the Lambda environment, ready to execute the AWS-written processing loop that is present when your Runtime is set to Node. That’s located at /var/runtime/index.mjs in case you’re wondering.

The final piece is gluing it all together with that wrapper script. My wrapper script looks like this:

    #!/bin/sh

    # Disable the telemetry file descriptor, which Deno refuses to write to
    unset _LAMBDA_TELEMETRY_LOG_FD

    # Replace this shell with deno, running the AWS-supplied processing loop
    exec /opt/bin/deno run \
      --allow-all \
      --unstable-bare-node-builtins \
      --unstable-node-globals \
      /var/runtime/index.mjs

Lambda executes the Node runtime’s bootstrap, which executes my wrapper script instead of node, and my wrapper runs /opt/bin/deno. I put my wrapper script in the layer zip next to deno, named wrapper, so AWS_LAMBDA_EXEC_WRAPPER is set to /opt/bin/wrapper for me.
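Put together, the relevant function configuration might look roughly like the following. This is only an illustration: the field names follow the Lambda CreateFunction API, while the layer ARN and the handler value are placeholders I made up.

```typescript
// Subset of the Lambda function configuration that matters for this setup.
interface FunctionConfig {
  Runtime: string;
  Handler: string;
  Layers: string[];
  Environment: { Variables: Record<string, string> };
}

const config: FunctionConfig = {
  // Still a managed Node runtime, so the AWS bootstrap and processing loop are used.
  Runtime: "nodejs22.x",
  Handler: "index.handler",
  // Hypothetical ARN of the layer containing bin/deno and bin/wrapper.
  Layers: ["arn:aws:lambda:us-east-1:123456789012:layer:deno:1"],
  // Tells the AWS bootstrap to run the wrapper instead of node.
  Environment: { Variables: { AWS_LAMBDA_EXEC_WRAPPER: "/opt/bin/wrapper" } },
};

console.log(JSON.stringify(config, null, 2));
```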

Did it work the first time? No. Of course not.

I had to unset the _LAMBDA_TELEMETRY_LOG_FD environment variable. This normally contains the number of the file descriptor the processing loop should write some telemetry to. For me it was 62, but I’m not sure if it always is. Apparently, unlike Node, Deno doesn’t like writing to arbitrary file descriptors that it didn’t open itself. Unsetting the environment variable disables this telemetry functionality.

I’m fairly new to Deno, so I didn’t even know that Deno runs quite locked down by default.

Deno is secure by default. Unless you specifically enable it, a program run with Deno has no access to sensitive APIs, such as file system access, network connectivity, or environment access.

This is a problem for a couple of reasons. Deno needs --allow-read to read other files such as the function handler. It also needs --allow-ffi because the AWS processing loop defaults to using a native module to talk to the Runtime API. I already forgot if it needed any more, but I decided that since Node runs without any such restrictions, Deno can too, so I set --allow-all.

--unstable-bare-node-builtins is set because even though Deno has great Node compatibility these days, it wants you to prefix imports of Node APIs with node:. For example, fs becomes node:fs. I originally started going down the path of using Regex to replace imports/requires to make them compatible, but instead I found this argument which allows bare (no node: prefix) imports. It does give a warning the first time, but whatever.
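For a sense of what the abandoned Regex route involves, here is a rough sketch of such a rewrite. It is purely illustrative and not something the final setup uses. The pattern, the function name and the short list of built-ins are my own assumptions, and it skips cases like subpath imports (fs/promises) or specifiers built at runtime.

```typescript
// A deliberately small list; Node has many more built-in modules.
const BUILTINS = ["fs", "path", "os", "crypto", "http", "https", "util"];

// Adds the node: prefix to bare built-in specifiers in import/require statements.
function prefixNodeBuiltins(source: string, builtins: string[] = BUILTINS): string {
  const names = builtins.join("|");
  // Matches: from "fs", import "fs", import("fs"), require("fs"), with either quote style.
  const pattern = new RegExp(
    `(\\bfrom\\s*|\\bimport\\s*\\(?\\s*|\\brequire\\s*\\(\\s*)(["'])(${names})\\2`,
    "g",
  );
  return source.replace(
    pattern,
    (_match: string, lead: string, quote: string, name: string) =>
      `${lead}${quote}node:${name}${quote}`,
  );
}

console.log(prefixNodeBuiltins('import fs from "fs";'));
// prints import fs from "node:fs";
```

A flag that makes bare imports work avoids maintaining a pattern like this against every way a module can be imported.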

Lastly is --unstable-node-globals, which enables using things like process without having to import node:process. This is important because environment variables are used a lot via process.env whereas Deno uses Deno.env.get and Deno.env.set for whatever reason. Again, I started Regex replacing these but I gave up and found this.
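The difference between the two styles of environment access can be shown with a tiny shim. This is a sketch with hypothetical names (RuntimeGlobals, makeEnvReader), included only to contrast the APIs. With the flag above, existing process.env code runs as-is and no shim like this is needed.

```typescript
// The two globals involved, reduced to the parts used here.
interface RuntimeGlobals {
  Deno?: { env: { get(name: string): string | undefined } };
  process?: { env: Record<string, string | undefined> };
}

// Returns a reader that uses Deno.env.get when available, else process.env.
function makeEnvReader(globals: RuntimeGlobals): (name: string) => string | undefined {
  if (globals.Deno) {
    const deno = globals.Deno;
    return (name) => deno.env.get(name);
  }
  if (globals.process) {
    const proc = globals.process;
    return (name) => proc.env[name];
  }
  return () => undefined;
}

// In real code you would build the reader once from the actual globals,
// then call readEnv("_HANDLER") wherever a variable is needed.
const readEnv = makeEnvReader(globalThis as unknown as RuntimeGlobals);
```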

That’s it! Really, that’s all it took and now my code runs. How well it works for real code depends on Deno’s Node compatibility, which it claims is excellent, but I understand it isn’t 100%. You probably want to use Deno APIs if you’re doing this though.

⛓️💥 Room for improvement

In my wrapper script, I’m just calling deno without any of the arguments Node is usually given. When the original bootstrap calls Node, it sets arguments relating to memory heap sizes, maximum HTTP header size, certificate authorities (if needed), garbage collection, etc. I’m not sure if Deno supports all of the same options or similar ones, but it’d be good to do the same tuning if possible.
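One possible direction, sketched under assumptions: Deno accepts V8 options through --v8-flags, so heap limits could be derived from the function’s memory size (available in the AWS_LAMBDA_FUNCTION_MEMORY_SIZE environment variable). The 90% and 5% ratios below are placeholders I picked for illustration, not values confirmed from the AWS bootstrap, and I haven’t measured whether they help.

```typescript
// Builds a --v8-flags argument for deno from the configured memory in MB.
// The default ratios are assumptions and would need tuning and measurement.
function denoV8Flags(memoryMb: number, oldSpaceRatio = 0.9, semiSpaceRatio = 0.05): string {
  const oldSpace = Math.floor(memoryMb * oldSpaceRatio);
  const semiSpace = Math.max(1, Math.floor(memoryMb * semiSpaceRatio));
  return `--v8-flags=--max-old-space-size=${oldSpace},--max-semi-space-size=${semiSpace}`;
}

console.log(denoV8Flags(128));
// prints --v8-flags=--max-old-space-size=115,--max-semi-space-size=6
```

The wrapper script would have to compute the same numbers in shell, since it runs before deno starts.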

📊 Performance

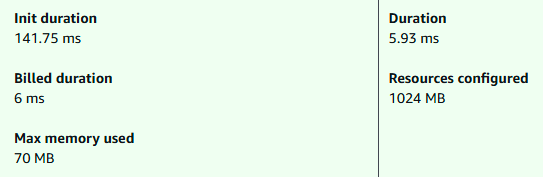

Let’s look at cold start performance. At 1024MB of memory, the pair look pretty much the same. I did this experiment because Deno is supposed to have a much faster cold start time compared to Node. I suspect that doesn’t kick in until you have more code running in the function than my test did.

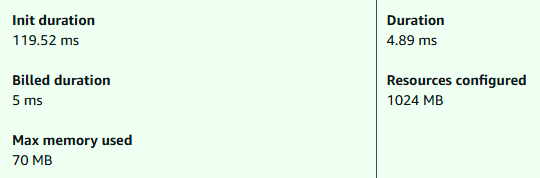

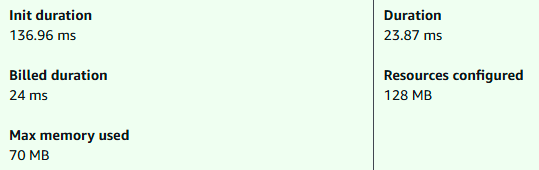

On 128MB of memory, the results were quite different.

It’s possible that the lack of memory tuning arguments is the sole cause of this. It could also be that AWS has a custom Node build that is more suitable for the ephemeral Lambda environment. I’m not sure.

After the cold start, on subsequent invocations, they both went down to single digit milliseconds on 1024MB. However, on 128MB, Node went down to single digits very quickly, while Deno took a few more requests to get there (there were a couple of 70ms invocations first). Weird.

🌀 TypeScript

TypeScript is a first class language in Deno, just like JavaScript. You can run or import TypeScript without installing anything.

This is something I want. Unfortunately, /var/runtime/index.mjs and its UserFunction class are specifically looking for handler files ending in .js, .mjs or .cjs when trying to import your handler. You configure something like index.handler and it looks for index, index.js, index.mjs or index.cjs. Deno only compiles a file as TypeScript if the extension is .ts (or a variant). Otherwise it treats it as plain JavaScript and throws errors.
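Roughly, the lookup behaves like the sketch below. This is a simplified model rather than the runtime’s actual code: splitHandler, candidateFiles and the two extension lists are names I made up, and the real implementation handles more cases such as nested exports.

```typescript
// Splits "index.handler" into the module part and the exported function name.
// The split happens at the first dot after the last slash.
function splitHandler(handler: string): { moduleName: string; functionName: string } {
  const slash = handler.lastIndexOf("/");
  const dot = handler.indexOf(".", slash + 1);
  if (dot <= slash + 1 || dot === handler.length - 1) {
    throw new Error(`Bad handler: ${handler}`);
  }
  return { moduleName: handler.slice(0, dot), functionName: handler.slice(dot + 1) };
}

// Lists the file names that would be tried for a module, in order.
function candidateFiles(moduleName: string, extensions: string[]): string[] {
  return [moduleName, ...extensions.map((ext) => moduleName + ext)];
}

// Extensions the stock runtime tries, and a variant where .cjs is swapped for .ts.
const STOCK_EXTENSIONS = [".js", ".mjs", ".cjs"];
const PATCHED_EXTENSIONS = [".js", ".mjs", ".ts"];

const { moduleName } = splitHandler("index.handler");
console.log(candidateFiles(moduleName, STOCK_EXTENSIONS).join(", "));
// prints index, index.js, index.mjs, index.cjs
console.log(candidateFiles(moduleName, PATCHED_EXTENSIONS).join(", "));
// prints index, index.js, index.mjs, index.ts
```

Nothing in the stock list ever ends in .ts, so a TypeScript handler is never found unless the list itself changes.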

But I won’t be beaten!

I modified my wrapper script to solve this problem:

    #!/bin/sh

    # 1: copy the runtime entry point somewhere writable and patch the .cjs lookup to .ts
    mkdir -p /tmp/runtime
    cp /var/runtime/index.mjs /tmp/runtime/index.mjs
    sed -i 's/_tryRequireFile(lambdaStylePath, ".cjs")/_tryRequireFile(lambdaStylePath, ".ts")/g' /tmp/runtime/index.mjs

    # 2: symlink everything else in /var/runtime next to the patched copy
    for item in /var/runtime/*; do
      [ "$item" = "/var/runtime/index.mjs" ] && continue
      basename=$(basename "$item")
      ln -s "$item" "/tmp/runtime/$basename"
    done

    unset _LAMBDA_TELEMETRY_LOG_FD

    exec /opt/bin/deno run \
      --allow-all \
      --unstable-bare-node-builtins \
      --unstable-node-globals \
      /tmp/runtime/index.mjs

There are three changes. #1 copies index.mjs to /tmp so it can be modified (/var/runtime is read-only). It then does a simple substitution so that the part of the code which would attempt to load index.cjs loads index.ts instead. This doesn’t touch your function code. It just patches the runtime to look for .ts files where it previously looked for .cjs files.

At the bottom of the script, I’m now telling deno to run /tmp/runtime/index.mjs. There are other files and folders in /var/runtime, so #2 creates symlinks in /tmp/runtime back to the originals.

I renamed index.js to index.ts and voila, we now have fully working TypeScript support! I suspect this added microseconds to the cold start time.
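For illustration, a handler file that relies on this could look like the following. The event and response shapes are hypothetical and only there to show type annotations surviving all the way into Lambda.

```typescript
// index.ts: hypothetical event and response shapes, purely for illustration.
interface GreetingEvent {
  name?: string;
}

interface GreetingResponse {
  statusCode: number;
  body: string;
}

// Configured in Lambda as index.handler, exactly as the .js version would be.
export const handler = async (event: GreetingEvent): Promise<GreetingResponse> => {
  const name = event.name ?? "world";
  return {
    statusCode: 200,
    body: JSON.stringify({ message: `Hello, ${name}!` }),
  };
};
```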

Conclusion

To switch from Node & JavaScript to Deno & TypeScript with this approach, it requires that you configure a Layer and one environment variable. No changes to your code. No Docker. No third-party libraries. I think that’s really cool!
