Looking at LambdaShell.com after 3+ years

lambdashell.com was registered on July 26th, 2018 and hosts a website made by Caleb Sima, who has been Chief Security Officer at stock-trading app Robinhood since February 2021.

The website has one page that asks the question “Is serverless insecure?” alongside the following challenge:

This is a simple AWS lambda function that does a straight exec. Essentially giving you a shell directly in my AWS infrastructure to just run your commands. A security teams worst nightmare.

Do whatever you want. Ultimate goal: take over the account, escalate privs or find some sensitive info.

Configured with all default permissions and settings. This service will sit for a bit and if nothing interesting happens it will be reconfigured very insecurely to see what happens.

$1,000 Bounty. Found something? Let me know at root@lambdashell.com

Below these messages is the interactive “Lambda Shell” followed by a table that displays how many times the most popular commands have been tried.

Terminal

Lambda Shell uses jQuery Terminal to accept commands, POST them to https://api.lambdashell.com/, and display the result.

The POST request body is a JSON object with a command property. The response is also a JSON object, this time with a result property.

For example:

Request → Response
{"command":"pwd"} → {"result":"/var/task\n"}
{"command":"ls"} → {"result":"index.js\n"}
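The exchange above can be reproduced with a rough Python sketch that uses only the standard library. The endpoint is the one above. The `Content-Type` header is my assumption, since jQuery Terminal's exact headers aren't shown, and the service may no longer answer.

```python
import json
import urllib.request

API_URL = "https://api.lambdashell.com/"


def build_request(command: str) -> urllib.request.Request:
    """Build a POST whose JSON body carries the command, e.g. {"command": "pwd"}."""
    body = json.dumps({"command": command}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={"Content-Type": "application/json"},  # assumed, not observed
        method="POST",
    )


def parse_response(raw: bytes) -> str:
    """Pull the command's stdout out of a body like {"result": "index.js\\n"}."""
    return json.loads(raw)["result"]


def run(command: str) -> str:
    """Send one command over the network and return its output."""
    with urllib.request.urlopen(build_request(command), timeout=30) as resp:
        return parse_response(resp.read())
```

For example, `run("pwd")` would be expected to return `"/var/task\n"` if the endpoint still behaves as described.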

Unsurprisingly, since we’re making HTTP requests that result in a Lambda function invocation, there are API Gateway headers in the response. Slightly more interesting is that there are both CloudFront and Cloudflare headers.

Functions

Lambda function user code lives in /var/task. From the ls output above, we know there is a lone index.js file in there, so it’s a Node.js function. We can confirm which Node.js version is used by inspecting the AWS_EXECUTION_ENV environment variable.

user@host:~ echo $AWS_EXECUTION_ENV
AWS_Lambda_nodejs12.x

We can also echo $_HANDLER and use its value (index.handler) to confirm that the handler function inside index.js is the entry point.

The function’s JavaScript code can be read by running cat index.js (the working directory is already /var/task).

The handler code logs the input event, then defines two functions: one named fun and another named exec. Lastly, it calls fun, passing in the input event and a callback named response.

fun synchronously invokes another Lambda function named filter, forwarding it the input event. When filter returns, fun calls the response callback, passing it filter’s error or response payload.

In the callback, if filter returned 'true', then the exec function is called, otherwise an empty response is returned to the client.

exec uses child_process.execSync() to execute the command we sent in via event.body.command. This function returns the stdout from the command, which is logged and returned to the client. Similarly, if an error is thrown, it’s logged and returned.
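To make the control flow concrete, here is a rough Python model of the handler described above. It is not Caleb's JavaScript. `invoke_filter` is a hypothetical stand-in for the synchronous invoke of filter. `subprocess.check_output(..., shell=True)` plays the role of execSync: both run the string through /bin/sh and raise on a non-zero exit. The shapes of the empty and error responses are my assumptions.

```python
import json
import subprocess
from typing import Callable


def exec_command(event: dict) -> dict:
    """Like exec: run event["body"]["command"] in a shell; return stdout or the error."""
    command = event["body"]["command"]
    try:
        stdout = subprocess.check_output(command, shell=True, text=True)
        print(stdout)                 # logged...
        return {"result": stdout}     # ...and returned to the client
    except subprocess.CalledProcessError as err:
        print(err)                    # errors are logged and returned too
        return {"result": str(err)}


def handler(event: dict, invoke_filter: Callable[[dict], str]) -> dict:
    """Like index.handler: log the event, ask filter, exec only on 'true'."""
    print(json.dumps(event))          # log the input event
    verdict = invoke_filter(event)    # fun: synchronous call to filter
    if verdict == "true":             # the response callback's check
        return exec_command(event)
    return {}                         # empty response otherwise
```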

Aside: The Node.js docs for execSync say “Never pass unsanitized user input to this function. Any input containing shell metacharacters may be used to trigger arbitrary command execution”. I found this amusing since the whole point of Lambda Shell is to allow arbitrary command execution.

Permissions

According to the Wayback Machine’s 18 August 2018 capture, Lambda Shell’s challenge message hasn’t changed one bit. It said back then, and still says now, that the execution role is “configured with all default permissions and settings”.

Lambda doesn’t really have default permissions per se. If you create a function using the API or CloudFormation, you must create and provide an execution role yourself.

However, if you use the console and select “Create a new role with basic Lambda permissions”, then Lambda will create an IAM role for you. Today, if you create an us-east-1 based function named example in AWS account 123456789012, the role will contain this policy:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "logs:CreateLogGroup",
      "Resource": "arn:aws:logs:us-east-1:123456789012:*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Resource": [
        "arn:aws:logs:us-east-1:123456789012:log-group:/aws/lambda/example:*"
      ]
    }
  ]
}

On October 25, 2018, Ory Segal published Securing Serverless: Attacking an AWS Account via a Lambda Function. Ory’s post details how he found and exploited the s3:ListBucket, s3:DeleteObject, and s3:PutObject permissions granted to Lambda Shell’s execution role. According to his screenshots, he deleted Lambda Shell’s index.html file on August 8th, which took the site down for a few days.

We know the execution role has lambda:InvokeFunction on (at least) the filter function, and there’s nothing about S3 in the default policy, so clearly the Lambda Shell execution role didn’t have default permissions back then.

What does it have now?

To investigate further, it’ll be easier to use the AWS CLI locally.

Lambda supplies functions with temporary credentials via environment variables, so we just need to read those.

user@host:~ export | awk '/ACCESS|SECRET|REGION|TOKEN/'

export AWS_ACCESS_KEY_ID="ASIARZMXIAFTJE4ZPUFD"

export AWS_DEFAULT_REGION="us-west-1"

export AWS_REGION="us-west-1"

export AWS_SECRET_ACCESS_KEY="2EpQgqqOQOF1+W...doNkkRDqJklWCV9"

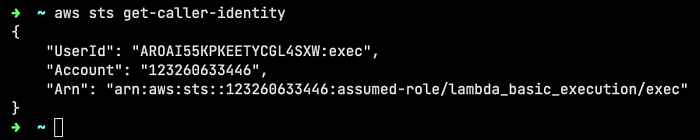

export AWS_SESSION_TOKEN="IQoJb3JpZ2luX2VjE...Yyd/fKl8zzP3ZnQA=="

We can test that everything works using aws sts get-caller-identity. We also see that the Lambda function is named exec, which we could have found out by inspecting the AWS_LAMBDA_FUNCTION_NAME environment variable.
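The function name shows up in get-caller-identity because, as far as I know, Lambda uses the function name as the session name when it assumes the execution role. Here is a small sketch that pulls it out of an assumed-role ARN. The account ID and role name in the example are hypothetical.

```python
def session_name(arn: str) -> str:
    """Return the session name from an STS assumed-role ARN.

    Expected shape: arn:aws:sts::ACCOUNT_ID:assumed-role/ROLE_NAME/SESSION_NAME
    """
    resource = arn.split(":", 5)[5]            # "assumed-role/ROLE_NAME/SESSION_NAME"
    kind, _role, session = resource.split("/", 2)
    if kind != "assumed-role":
        raise ValueError(f"not an assumed-role ARN: {arn}")
    return session
```

For example, `session_name("arn:aws:sts::123456789012:assumed-role/example-role/exec")` returns `"exec"`.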

Let’s check if the S3 permissions Ory discovered have been removed.

s3:ListBucket is still there, but s3:PutObject and s3:DeleteObject have been removed.

Lambda

We know we have permission to invoke the filter function. Invoking it ourselves shows that filter is configured with 128 MB of memory and returns an error.

The error is because filter tries to access a property named source-ip inside another property which isn’t defined on the input (that makes sense as I didn’t even provide an input).

Given that exec is just passing through its own input, source-ip isn’t on the API Gateway proxy event, and exec is accessing event.body.command without first running JSON.parse, this suggests there’s an API Gateway mapping template involved.
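The reasoning above rests on the difference between the two event shapes. Here is a Python sketch of that difference. With Lambda proxy integration, the body arrives as a raw JSON string and the caller's IP lives at requestContext.identity.sourceIp. The "mapped" event is hypothetical. The real parent property of source-ip is unknown, so "context" is only a guess.

```python
import json

# Proxy integration: body is a JSON *string*; the IP is under requestContext.
proxy_event = {
    "body": '{"command": "ls"}',
    "requestContext": {"identity": {"sourceIp": "203.0.113.10"}},
}

# Hypothetical mapping-template output: body already parsed and the IP copied
# into a "source-ip" key under some parent ("context" is a guess).
mapped_event = {
    "body": {"command": "ls"},
    "context": {"source-ip": "203.0.113.10"},
}


def command_from(event: dict) -> str:
    """Read the command from either shape; proxy bodies need parsing first."""
    body = event["body"]
    if isinstance(body, str):  # proxy integration: the JSON.parse step
        body = json.loads(body)
    return body["command"]
```

Code like exec's, which reads body.command directly, only works with the second shape.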

What else can we invoke? What about exec itself?

Yes, we can invoke exec. I didn’t pass a payload again, and it’s expecting something like { body: { command: "ls" } }, so we get an error when it tries to read command from body. We can see exec also has 128 MB of memory, and it has X-Ray active tracing enabled (notice the XRAY TraceId on the last line).

It makes sense to have lambda:InvokeFunction for filter, but why exec? Maybe the IAM role just isn’t locked down to any particular function. The “Commands Tried” list on lambdashell.com is dynamically populated from a simple GET of https://api.lambdashell.com/top-commands. Since Caleb seems to have used a simple function naming convention, let’s try guessing function names.

I got it on the first guess. The output almost looks like an error, but it’s actually just the tail end of the response JSON array being logged to the console. The very last log message is INFO redis client connection closed. It’s reasonable to assume the data is stored in Amazon ElastiCache for Redis.

Redis

ElastiCache clusters are created inside a VPC, so the top-commands function must be attached to that VPC. To connect to a VPC, the execution role needs the ec2:CreateNetworkInterface, ec2:DescribeNetworkInterfaces, and ec2:DeleteNetworkInterface permissions. Are all the functions sharing an execution role?

Nope, doesn’t look like it. I don’t think there is anything we can do with this knowledge then.

API Gateway

I didn’t mention it at the start, but the website has another table called “Issues Found” that lists a few issues discovered by previous explorers, including Ory.

This table is also populated dynamically, but this time the URL is https://yypnj3yzaa.execute-api.us-west-1.amazonaws.com/dev//issues. If I change /issues to /top-commands, I get the same result as before, so this is probably the direct API Gateway URL for api.lambdashell.com.
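The direct URL follows API Gateway's https://{api_id}.execute-api.{region}.amazonaws.com/{stage}/ pattern. Here is a small sketch that splits it up, showing the API ID, the region (which matches AWS_REGION), and the dev stage. Collapsing the doubled slash is my choice, made so that both paths compare equally.

```python
from urllib.parse import urlsplit


def parse_execute_api_url(url: str):
    """Split https://{api_id}.execute-api.{region}.amazonaws.com/{stage}/{path}."""
    parts = urlsplit(url)
    labels = parts.hostname.split(".")
    if len(labels) != 5 or labels[1] != "execute-api" or labels[3:] != ["amazonaws", "com"]:
        raise ValueError(f"not an execute-api URL: {url}")
    api_id, region = labels[0], labels[2]
    segments = parts.path.split("/", 2)        # ["", stage, rest-of-path]
    stage = segments[1] if len(segments) > 1 else ""
    rest = segments[2] if len(segments) > 2 else ""
    return api_id, region, stage, "/" + rest.lstrip("/")
```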

api.lambdashell.com goes through Cloudflare, so this knowledge can be used to avoid any sort of protection Cloudflare is adding. However, since we can invoke the functions directly using the Lambda API, that protection isn’t doing much.

Aside: CDN77’s TLS Checker says Cloudflare supports TLS 1.3 whereas API Gateway doesn’t. Both have TLS 1.0 & 1.1 enabled.

X-Ray

Enabling X-Ray active tracing requires the execution role to have the xray:PutTraceSegments and xray:PutTelemetryRecords permissions.

To try this out quickly, we can send PutTraceSegments a segment that uses the trace ID in _X_AMZN_TRACE_ID.

user@host:~ echo $_X_AMZN_TRACE_ID
Root=1-61c329d4-6bc7e7421dee02f5235e9cc4;Parent=67bab5d2e727bc1d;Sampled=1

This environment variable will change every time you run the command (i.e. every invocation).

We know it worked because UnprocessedTraceSegments is empty…
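Here is a minimal sketch of building such a segment document from the trace header. It only sets the required fields (name, a 16-hex-digit id, trace_id, start and end times) plus parent_id. The segment name is hypothetical, and the 1 ms duration is arbitrary.

```python
import json
import secrets
import time
from typing import Dict


def parse_trace_header(header: str) -> Dict[str, str]:
    """Turn 'Root=...;Parent=...;Sampled=1' into a dict."""
    return dict(part.split("=", 1) for part in header.split(";") if part)


def make_segment(header: str, name: str = "example-segment") -> str:
    """Build a minimal X-Ray segment document that joins the trace in `header`."""
    fields = parse_trace_header(header)
    start = time.time()
    segment = {
        "name": name,                 # hypothetical segment name
        "id": secrets.token_hex(8),   # 16 hex digits
        "trace_id": fields["Root"],
        "start_time": start,
        "end_time": start + 0.001,    # an arbitrary 1 ms segment
    }
    if "Parent" in fields:
        segment["parent_id"] = fields["Parent"]
    return json.dumps(segment)
```

The resulting JSON string can be passed to `aws xray put-trace-segments --trace-segment-documents`. An empty UnprocessedTraceSegments list in the response means it was accepted.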

Logs

The basic permissions we expect to be in the role are logs:CreateLogGroup, logs:CreateLogStream, and logs:PutLogEvents.

I have a blog post on Lambda logging if you’re interested in a deeper understanding of how these fit together and are used by Lambda.

If I test these permissions I find that they all work.

In fact, they work better than they should. According to the resource lists in the default execution role Lambda creates today, I should have been able to create that log group, but not create a log stream or events within it.

The default policy locks the latter two operations down to the function’s own log group (/aws/lambda/ followed by the function name). It also restricts all three actions to the same AWS region as the function.

It looks like we have another * resource, just like lambda:InvokeFunction probably has.
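The difference between the default resource list and a bare * can be illustrated with a small sketch. It approximates IAM resource matching with `fnmatch.fnmatchcase`, where `*` and `?` are wildcards. This is an approximation: fnmatch also treats `[...]` in a pattern as a character class, which IAM does not. The account ID and the "example" function are the hypothetical values from the policy above.

```python
from fnmatch import fnmatchcase
from typing import Iterable

# Resource list the default role gives CreateLogStream/PutLogEvents.
DEFAULT_STREAM_RESOURCES = [
    "arn:aws:logs:us-east-1:123456789012:log-group:/aws/lambda/example:*",
]


def allowed(resource_arn: str, patterns: Iterable[str]) -> bool:
    """Approximate IAM resource matching with case-sensitive wildcards."""
    return any(fnmatchcase(resource_arn, p) for p in patterns)
```

Under the default list, a stream in /aws/lambda/example in us-east-1 is allowed. A stream in any other log group or region is not, while `["*"]` allows everything.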

Denial of service

With the information gathered above, we can avoid Cloudflare’s rate limiting (assuming it’s enabled) by calling API Gateway directly, and we can avoid API Gateway’s rate limiting (again, assuming it’s enabled) by invoking the Lambda functions directly. A simple denial of service attack can be performed by overloading either the exec or filter function with requests.

By default, an AWS account has a limit of 1,000 concurrent executions per region, shared between all functions in that region. How do we consume concurrency? The easiest way I can think of is to use the sleep command to do nothing for 16 minutes. Since functions can run for at most 15 minutes, each sleep would hold one unit of concurrency until the function times out.

user@host:~ sleep 16m
2021-12-22T14:41:40.843Z cef17277-fb37-4f18-a10d-edeb969ad426 Task timed out after 10.01 seconds

Of course, a 15-minute timeout on this function would be overkill, and it looks like Caleb went with 10 seconds. Therefore we just have to run 1,000 parallel sleep 10s invocations every 10 seconds. Or do we?

Individual per-function concurrency limits were released in November 2017, well before this project went live, so it’s logical that exec would have a concurrency limit set. exec's limit can be determined via brute force by running sleep 9s && echo $AWS_LAMBDA_LOG_STREAM_NAME many times in parallel until Lambda starts returning throttling errors. The number of unique log stream names can then be counted. In this case, I determined exec has a reserved concurrency of 10 (humans like the number 10, don’t they?).
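The brute-force probe can be sketched as follows. Each execution environment writes to its own log stream, so the number of distinct stream names among overlapping invocations approximates the reserved concurrency. `invoke` is a hypothetical callable that runs a command through exec and returns None when Lambda throttles the call. The estimate only holds if all the invocations really overlap.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Optional, Tuple

# Hypothetical invoker: stdout of the command, or None on a throttling error.
Invoker = Callable[[str], Optional[str]]

PROBE = "sleep 9s && echo $AWS_LAMBDA_LOG_STREAM_NAME"


def probe_concurrency(invoke: Invoker, attempts: int = 50) -> Tuple[int, int]:
    """Fire `attempts` overlapping invocations; return (distinct log streams, throttles)."""
    with ThreadPoolExecutor(max_workers=attempts) as pool:
        results = list(pool.map(lambda _: invoke(PROBE), range(attempts)))
    streams = {out.strip() for out in results if out is not None}
    throttled = sum(1 for out in results if out is None)
    return len(streams), throttled
```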

So, for a simple denial of service attack, we’d run at least 10 sleep 10s invocations in parallel every 10 seconds. There are tools for that, but just to try it out, we can use a for loop that starts 10 parallel 10-second sleeps.

for i in {1..10}; do aws lambda invoke --function-name exec \
  --payload '{"body":{"command":"sleep 10s"}}' \
  --cli-binary-format raw-in-base64-out /dev/null & done

While this command is running, lambdashell.com responds with 500 errors and the jQuery Terminal just displays a blank output.

Spend

AWS famously has no way of limiting spend to a chosen dollar amount. You have to use a combination of service quotas, user configurable limits (like throttling and concurrency), alarms, and reports to know when things are getting expensive.

Spend attacks are interesting because it’s not immediately obvious why an attacker would bother. However, doing this to a small competitor or individual could be ruinous for them.

Let’s take a look at how expensive the limited permissions we have can be.

Note: These calculations assume perfect conditions and gloss over some nuance.

Lambda

In us-west-1, where Lambda Shell runs (according to the $AWS_REGION env var), invocations cost $0.20 per 1M, and $0.0000000021 per millisecond (the price for 128 MB of memory). In terms of our 10 second sleep, that’s $0.0000212 per invocation, or $21.20 per million invocations.

exec has a concurrency limit of 10, so 1 million 10-second invocations would take 1,000,000 seconds (about 11.6 days). This isn’t much of a risk.
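Here is the same arithmetic as a quick Python check, using the us-west-1 prices quoted above for a 128 MB function.

```python
REQUEST_PRICE = 0.20 / 1_000_000     # USD per invocation
PRICE_PER_MS_128MB = 0.0000000021    # USD per ms at 128 MB (us-west-1)


def invocation_cost(duration_ms: float) -> float:
    """Request charge plus duration charge for one invocation."""
    return REQUEST_PRICE + duration_ms * PRICE_PER_MS_128MB


def days_for(invocations: int, duration_s: float, concurrency: int) -> float:
    """Wall-clock days to finish `invocations` when only `concurrency` run at once."""
    return invocations * duration_s / concurrency / 86_400


per_call = invocation_cost(10_000)   # a 10-second sleep
print(f"${per_call:.7f} per call, ${per_call * 1_000_000:.2f} per million")
print(f"{days_for(1_000_000, 10, 10):.1f} days for 1M calls at concurrency 10")
```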

Another cost that’s easy to forget is data transfer. Data transfer out of us-west-1 to the Internet costs between $0.05 and $0.09 per GB, depending on volume.

Data transferred “in” to and “out” of your AWS Lambda functions, from outside the region the function executed, will be charged at the Amazon EC2 data transfer rates as listed under “Data transfer”.

top-commands has the largest output (an array of the top 1,000 commands and how often they’ve been used). It also doesn’t appear to have a concurrency limit. Cloudflare compresses top-commands’s response using Brotli, but when it’s accessed directly from API Gateway, we see the response body is currently an uncompressed 29,946 bytes.

Each request takes about 250ms, so under perfect conditions, a single thread sending GET requests constantly could extract ~0.402 GB per hour. At the cheapest pricing, that’s $0.02008 per hour. Maxing out the account’s Lambda concurrency using 1,000+ threads, that’s $20.08 an hour, or $14,939 in a standard 744 hour AWS-month.

Hang on… exec lets us run arbitrary commands with arbitrary output, so we can control how much data it returns. Running cat /var/runtime/*.** six times returns just shy of 1 MB of data.

Aside: Lambda can usually return up to 6 MB, but child_process seems to throw an error after 1 MB. That lines up with execSync’s default maxBuffer of 1024 * 1024 bytes.

cat /var/runtime/*.** && cat /var/runtime/*.** && cat /var/runtime/*.** && cat /var/runtime/*.** && cat /var/runtime/*.** && cat /var/runtime/*.**

The execution time fluctuates quite a bit since it’s a 128 MB function, but on average it takes about 3 seconds to egress 986 KB. Running that repeatedly for an hour would egress about 1.1284 GB. exec’s concurrency allows for 10 threads, so that’s 11.284 GB per hour, or $0.564 per hour (~$419 a month).

Okay, so because of exec’s concurrency limit, my initial thought was right: top-commands is the more expensive target. No problem though, one could just run both.
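Here are both egress estimates side by side as a Python sketch, under the same perfect-conditions assumptions. The figures above line up with 1 GB = 2^30 bytes and 1 KB = 1,024 bytes, so the sketch uses those. It also applies the cheapest $0.05/GB tier throughout.

```python
GIB = 1024 ** 3
PRICE_PER_GB = 0.05        # cheapest us-west-1 Internet egress tier
HOURS_PER_MONTH = 744


def gib_per_hour(bytes_per_response: int, seconds_per_response: float, threads: int) -> float:
    """Data egressed per hour by `threads` clients looping back-to-back requests."""
    responses = 3600 / seconds_per_response * threads
    return responses * bytes_per_response / GIB


top_commands = gib_per_hour(29_946, 0.25, 1_000)  # ~1,000 threads, no concurrency limit
exec_output = gib_per_hour(986 * 1024, 3.0, 10)   # exec's reserved concurrency of 10

for name, gib in [("top-commands", top_commands), ("exec", exec_output)]:
    hourly = gib * PRICE_PER_GB
    print(f"{name}: {gib:.3f} GiB/h, ${hourly:.2f}/h, ${hourly * HOURS_PER_MONTH:,.0f}/month")
```

Running both at once simply adds the two monthly totals.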

X-Ray

Since I have exec’s AWS credentials, and it has X-Ray permissions, I can call PutTraceSegments as much as I want. Beyond the free tier, X-Ray traces cost $5.00 per 1 million traces recorded ($0.000005 per trace). That’s 25 times the per-request cost of a Lambda invocation ($0.20 per 1 million).

By default, the X-Ray SDK records the first request each second, and five percent of any additional requests.

To keep things simple, let’s assume X-Ray is configured with its defaults. That means ~1 in every 20 requests would be sampled. As far as I can see, X-Ray doesn’t have any upper limit on the number of requests per second, so I’m not sure how to put a cost on this. Perhaps there is no upper bound?
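Without an upper bound, the best we can do is express cost as a function of request rate. Here is a sketch under the default sampling rule quoted above: the first request each second, plus 5% of the rest. It uses the $5.00 per million price and ignores the free tier.

```python
TRACE_PRICE = 5.00 / 1_000_000   # USD per trace recorded, beyond the free tier


def sampled_traces_per_second(requests_per_second: int) -> float:
    """Default rule: first request each second, plus 5% of any additional ones."""
    if requests_per_second <= 0:
        return 0.0
    return 1 + 0.05 * (requests_per_second - 1)


def monthly_trace_cost(requests_per_second: int, hours: int = 744) -> float:
    """USD for a month of sustained traffic at a constant request rate."""
    return sampled_traces_per_second(requests_per_second) * TRACE_PRICE * 3600 * hours
```

For example, 1,001 requests per second samples 51 traces per second, or about $683 over a 744-hour month. The cost then grows linearly with whatever rate the API accepts.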

CloudWatch Logs

Next we have CloudWatch Logs. Log groups and streams aren’t billed, but the obvious spend attack is to write heaps of log events. Again, we have exec's credentials, so we’re not limited by Lambda timeouts, performance, or concurrency; just account limits.

PutLogEvents is limited to 5 requests per second per log stream, and to 800 or 1,500 transactions per second (TPS) per account per region, depending on the region. That means we need 160 to 300 log streams to max out a region. The quota for creating new log streams is 50 per second per region, so that wouldn’t be a problem.

This means we can call PutLogEvents 800 or 1,500 times a second per region. The per-region part is important because exec’s execution role is allowed to use CloudWatch Logs in any region. There are currently 17 regions that don’t require opt-in (and 5 that do). Of those 17, 3 allow 1,500 TPS and the other 14 allow 800. In total, that’s a whopping 15,700 TPS.

Each of those requests can have up to 1 MB of data, giving us a total data ingestion of 15.33 GB/s.
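Here are the quota numbers above as a quick check, counting only the 17 regions that don't require opt-in. I don't name which 3 regions get 1,500 TPS here.

```python
PER_STREAM_TPS = 5           # PutLogEvents requests/second per log stream
MAX_BATCH_BYTES = 1_048_576  # bytes per PutLogEvents call

# 3 regions at 1,500 TPS, the other 14 non-opt-in regions at 800 TPS.
region_tps = [1_500] * 3 + [800] * 14

total_tps = sum(region_tps)
streams_needed = [tps // PER_STREAM_TPS for tps in (800, 1_500)]
ingest_gib_per_s = total_tps * MAX_BATCH_BYTES / 1024 ** 3

print(total_tps, streams_needed, round(ingest_gib_per_s, 2))
```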

Log data ingestion cost depends on the region, ranging from $0.50 per GB in us-east-1 (N. Virginia) to $0.90 per GB in sa-east-1 (São Paulo). Time for a table (that is, apparently I have time to make a table).

$9.57 per second is $25,628,460 in a 744-hour month. That’s the sort of number that makes me think I calculated something wrong. Then again, this is a month of saturating an account’s logging globally, and CloudWatch is known to be quite expensive at scale.

CloudWatch Metrics

I haven’t mentioned cloudwatch:PutMetricData until now because we haven’t seen any evidence that the role has this permission. As crazy as it sounds, we don’t need that permission to create metrics thanks to the Embedded Metric Format (EMF) launched in November 2019.

EMF is enabled by setting the x-amzn-logs-format HTTP header to json/emf when calling PutLogEvents. AWS Lambda does this for you by default.

From there, you simply log JSON blobs like this example from the docs:

{
  "_aws": {
    "Timestamp": 1574109732004,
    "CloudWatchMetrics": [
      {
        "Namespace": "lambda-function-metrics",
        "Dimensions": [["functionVersion"]],
        "Metrics": [
          {
            "Name": "time",
            "Unit": "Milliseconds"
          }
        ]
      }
    ]
  },
  "functionVersion": "$LATEST",
  "time": 100,
  "requestId": "989ffbf8-9ace-4817-a57c-e4dd734019ee"
}

EMF lets you create “100 metrics per log event and 9 dimensions per metric”. So what data do we put in the 15,700 logging requests per second? A heap of metrics, of course!

Each call to PutLogEvents can carry 1,048,576 bytes (1 MB) split between up to 10,000 log events. That would be 104 bytes per log event, but assuming UTF-8, the example metric above is 273 bytes when minified, and PutLogEvents also counts 26 bytes of overhead per event toward the batch size. Let’s be generous and call it 300 bytes per event, which gives us about 3,500 metrics per PutLogEvents call.
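Here is a small Python sketch that measures the minified EMF example from the docs. It then works out how many such events fit in one PutLogEvents batch, counting the 26 bytes PutLogEvents adds per event when it sizes a batch.

```python
import json

# The EMF example from the CloudWatch docs, as shown above.
example = {
    "_aws": {
        "Timestamp": 1574109732004,
        "CloudWatchMetrics": [
            {
                "Namespace": "lambda-function-metrics",
                "Dimensions": [["functionVersion"]],
                "Metrics": [{"Name": "time", "Unit": "Milliseconds"}],
            }
        ],
    },
    "functionVersion": "$LATEST",
    "time": 100,
    "requestId": "989ffbf8-9ace-4817-a57c-e4dd734019ee",
}

MAX_BATCH_BYTES = 1_048_576  # PutLogEvents batch size limit
MAX_EVENTS = 10_000          # PutLogEvents events-per-call limit
EVENT_OVERHEAD = 26          # bytes counted per event on top of the message


def minified_size(event: dict) -> int:
    """UTF-8 length of the event serialized without whitespace."""
    return len(json.dumps(event, separators=(",", ":")).encode("utf-8"))


def events_per_batch(message_bytes: int) -> int:
    """How many events of this size fit in one PutLogEvents call."""
    return min(MAX_EVENTS, MAX_BATCH_BYTES // (message_bytes + EVENT_OVERHEAD))


size = minified_size(example)
print(size, events_per_batch(size))
```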

All custom metrics charges are prorated by the hour and metered only when you send metrics to CloudWatch.

This means that each time we send a new metric, assuming we give it a unique name (like a UUID), we will be billed for 1 hour of that metric.

Custom metric pricing is conveniently the same across all regions.

Time to calculate. We can do 15,700 PutLogEvents calls per second globally. Each one can have ~3,500 metrics; that’s 54,950,000 new metrics a second, and each one gets billed for an hour. At the cheapest pricing tier ($0.02 per metric-month, prorated to 1/744 of that per hour), each second’s worth of metrics costs $1,477. Over the 2,678,400 seconds in a 744-hour month, that’s roughly $3.96 billion.
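Here is the custom-metric arithmetic as a Python check. It assumes every metric is new (uniquely named), is billed for exactly one prorated hour, and is charged at the cheapest $0.02 per metric-month tier. The monthly total multiplies the per-second cost by the number of seconds in a 744-hour month.

```python
PUT_LOG_EVENTS_TPS = 15_700    # global, non-opt-in regions
METRICS_PER_CALL = 3_500       # ~300-byte EMF events per 1 MB batch
PRICE_PER_METRIC_MONTH = 0.02  # cheapest custom-metric tier (USD)
HOURS_PER_MONTH = 744

new_metrics_per_second = PUT_LOG_EVENTS_TPS * METRICS_PER_CALL
# One billed hour is 1/744 of a metric-month.
cost_per_second = new_metrics_per_second * PRICE_PER_METRIC_MONTH / HOURS_PER_MONTH
cost_per_month = cost_per_second * 3600 * HOURS_PER_MONTH

print(f"{new_metrics_per_second:,} metrics/s, ${cost_per_second:,.2f}/s, ${cost_per_month:,.0f}/month")
```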

Bonus: S3

The “Issues Found” table contains another spend attack found by Benjamin Manns. I think it’s worth a mention here too.

Benjamin’s attack involves creating an S3 bucket and enabling Requester Pays.

In general, bucket owners pay for all Amazon S3 storage and data transfer costs that are associated with their bucket. However, you can configure a bucket to be a Requester Pays bucket. With Requester Pays buckets, the requester instead of the bucket owner pays the cost of the request and the data download from the bucket.

The files could be arbitrarily large and the requests could be frequent, leading to an unbounded cost.

This would be useful for anyone who is looking to transfer data out of AWS on your dime. E.g. someone wants to move from AWS to GCP and needs to transfer a petabyte of archived data to another cloud. Or, perhaps someone wants anonymous (and free) access to something only available via requester-pays.

I didn’t test this one, but it sounds plausible and definitely something to be aware of.

Conclusion

Expensive is a subjective measure. The numbers in this post may not bother someone like Elon Musk, but for the rest of us there are important lessons to remember.

- Do what you can to keep even the most basic credentials secure.

- Sanitize user inputs and outputs to avoid leaking credentials.

- Ensure you have billing alarms configured to catch anything like this ASAP.

- Follow the principle of least privilege (remove unnecessary permissions and don’t use "*" as a resource in an IAM policy).

- Apply throttling and limits where possible.

- Be aware of all regions, not just the one you expect resources to exist in.

This was a fun investigation which revealed some very real problems. Please let me know if you can think of more, or if my calculations are wrong!

Disclaimer: I don’t endorse anyone performing anything malicious based on this post. This post is for educational purposes only.

For more like this, please follow me on Medium, Twitter, and Bluesky.